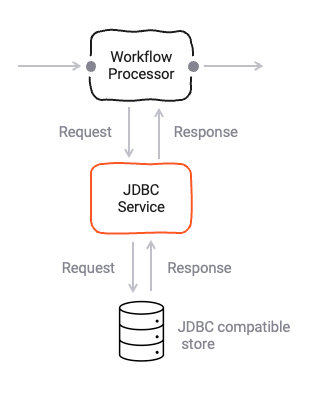

JDBC Service

Purpose

Define a service to interface with a JDBC data source.

This service is specialized for interacting with JDBC (Java Database Connectivity) compatible databases. It allows you to configure functions that can execute SQL queries and statements against a connected database. Classical RDBMS (Relational Database Management Systems) that are JDBC compatible are:

- Oracle Database: Widely used in enterprise environments, Oracle’s JDBC drivers support various versions of the database.

- MySQL: An open-source RDBMS that’s very popular for web applications. It has a well-supported JDBC driver.

- PostgreSQL: Another open-source RDBMS known for its advanced features and standards compliance. It also has robust JDBC support.

- Microsoft SQL Server: A widely used RDBMS in enterprise environments, with comprehensive JDBC driver support.

These databases are commonly used and have strong JDBC support, making them suitable for various applications. While they are only examples. Other RDBMS or backends supporting JDBC functions could be connected through this JDBC Service Asset as well.

Prerequisites

None

Configuration

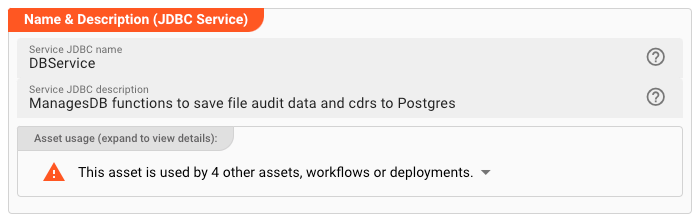

Name & Description

-

Name: Name of the Asset. Spaces are not allowed in the name. -

Description: Enter a description.

The Asset Usage box shows how many times this Asset is used and which parts are referencing it. Click to expand

and then click to follow, if any.

Required roles

In case you are deploying to a Cluster which is running (a) Reactive Engine Nodes which have (b) specific Roles

configured, then you can restrict use of this Asset to those Nodes with matching

roles.

If you want this restriction, then enter the names of the Required Roles here. Otherwise, leave empty to match all

Nodes (no restriction).

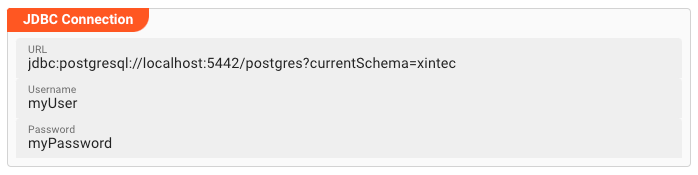

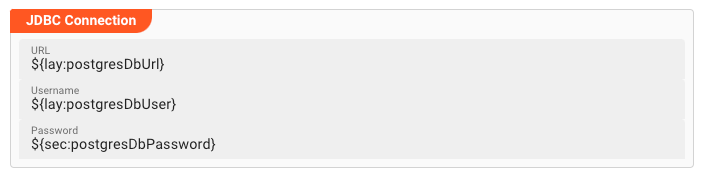

JDBC Connection

JDBC requires a connection which is requires an address, username and password.

-

URL: The URl on how to access the JDBC source. Consult with the data source on how this URL needs to be composed. It is exactly like you would use it in a tool like DBeaver for example. -

Username: Name of user to log in. -

Password: Associated password of the user.

You can use ${...} macros to expand variables defined in environment variables:

Service Functions

Services are accessed from other Assets via invocations of Functions.

This is where you define such functions.

In the context of JDBC, a Service Function encapsulates any valid DML (data manipulation) or even DDL (data definition)

statement.

Typically, you will be using INSERT, SELECT and UPDATE statements here.

Create Service Function

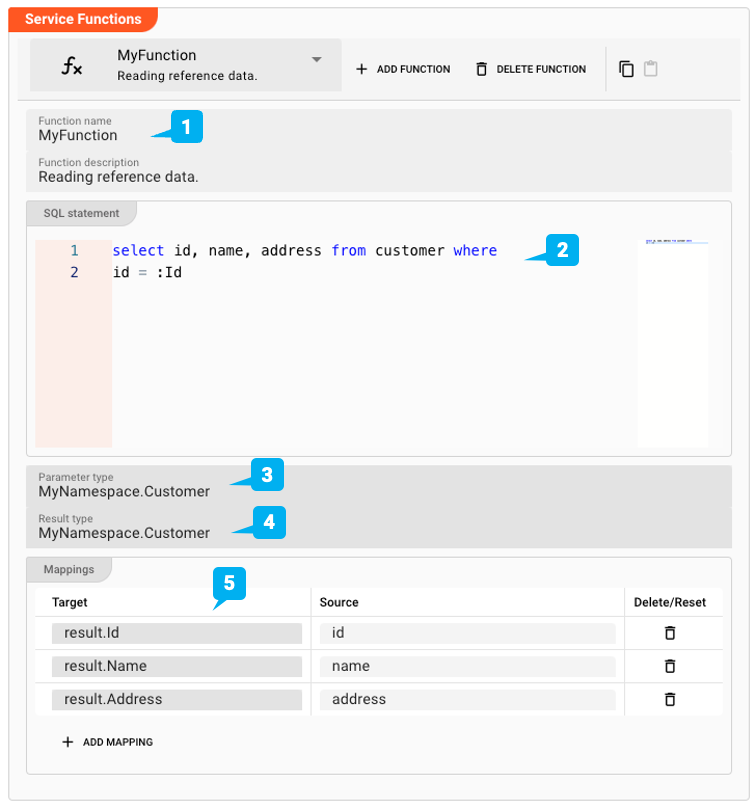

First create a new Function (1):

Next fill out the details:

-

Function name(1): The name of the function. Must not have whitespaces. -

Function description: Something which describes the function (optional). -

SQL Statement(2): The actual SQL-Statement to access execute against the JDBC data source. Please note the use of the:Idbind-variable in the example above. The variables you can use here, must have been defined in the data dictionary and assigned via theParameter type. See next section to learn how to do this. -

Parameter type(3): Reference to a data dictionary type which you must have defined below. All members of this type can be used as bind-variables in the SQL-Statement. -

Result type(4): Reference to a data dictionary type which you must have defined below. All members of this type can be used as result variables in the SQL-Statement. Note, that this can be the same type as used for theParameter type. In our example they share the same variables. -

Mappings(5): Define how you map the results from the SQL-Statement to yourResult Typedata structure. On the left you enter (assisted) the bind-variable names to which members of theResult Typeshould be mapped. Member names are always preceded withresult.and then followed by the member name. On the right hand side, enter the original field names used in your SQL-Statement.

Data Dictionary

The Data Dictionary allows us to define complex data structures which can be mapped onto JDBC data types and vice versa.

For this purpose, we need to define an internal custom data type which can receive the results of the statement, and/or

also provide data for other parts of the statement, e.g. for the where-clause as in our example.

As an example, let’s do this for the above SQL-Statement accessing the customer table. What do we need here?

- We are selecting three fields

ìd,nameandaddressfrom that table. So we need three corresponding fields in our own data dictionary that we can map these results to. - We query based on an

id(of the customer). We need to map an internal Id field from the data dictionary to thewhere-clause in the SQL-Statement. For this purpose we can use the same data structure as for 1., which already contains an Id field.

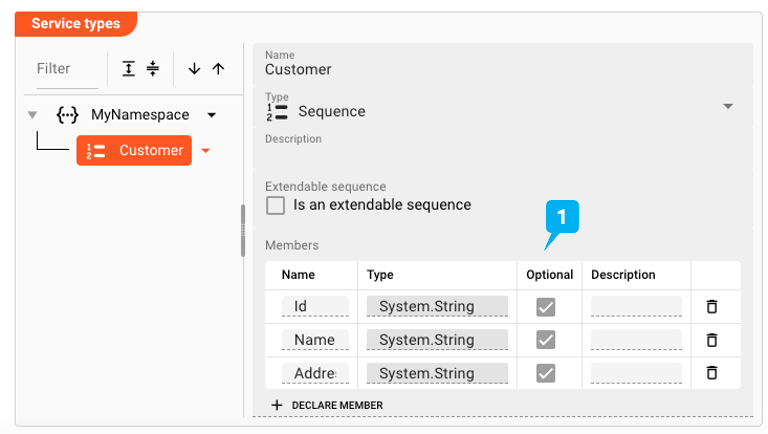

Let's define the necessary custom data type using the data dictionary:

- Declare a new type

- Declare namespace (optional)

- Declare Sequence

Customer

1. Declare a new type (1):

2. Declare namespace

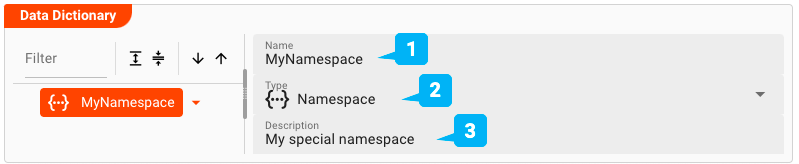

To better organize data types, we declare a namespace first (optional):

-

Name(1): The name of the element. If you are configuring a namespace, and you reuse the name of a namespace, which you have created elsewhere in this Project, then the elements of the namespaces will be merged into the namespace by this same name. Otherwise the name must be unique and may not contain spaces. -

Type(2): Pick the type of the element. In our example we first define a namespace. When we define additional elements under that namespace we will pick any of the other data types to actually hold the data. -

Description(3): Anything which describes the element further.

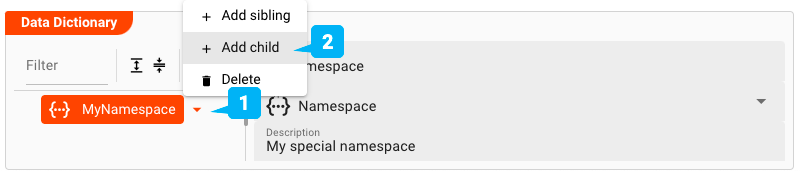

3. Declare Customer Sequence

Add a child to the namespace we just created:

- Click the small arrow next to the namespace name (1)

- Select

Add childto add a child element to the namespace - Fill in the details:

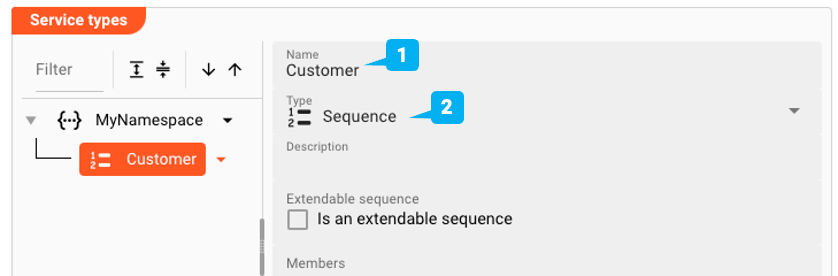

-

Name(1): Name the elementHistory -

Type(2): SelectSequenceas the element type. In the next step we will create individual members of the sequence. -

Extendable Sequence(3): Leave this unchecked for the example. If checked, it allows you and layline.io to dynamically extend the list of sequence members while working with the data type which we are defining. If - for example - your incoming data format has additional fields which are not defined in the sequence, the sequence will be automatically extended by these fields.

Now we add a list of member fields which make up the sequence (1):

To later reference the Name field, we can use the path MyNamespace.Customer.Name, and so forth.

Example: Using the JDBC Service

The JDBC Service can be used from within a JavaScript Asset. In our example we have a simple Workflow which reads a file with customer related data (1), then in a next step (2) reads corresponding customer date from a JDBC source, and simply outputs this data to the log. There is no other purpose in this Workflow than to demonstrate how to use the Service.

In the middle of the Workflow we find a JavaScript Processor by the name of “EnrichCustomer”. This Processor reads additional customer information from a JDBC compatible store using the JDBC Service.

How is it configured?

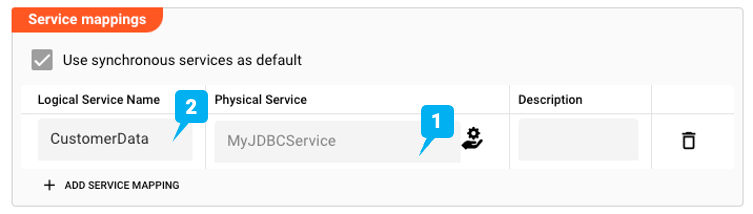

Link EnrichCustomer Processor to JDBC Service

To use the JDBC Service in the JavaScript Processor, we first have to assign the Service within the JavaScript Processor like so:

-

Physical Service(1): The JDBC Service which we have configured above. -

Logical Service Name(2): The name by which we want to use the Service within JavaScript. This could be the exact same name as the Service or a name which you can choose. Must not include whitespaces.

Access the Service from within JavaScript

Now let’s finally use the service within JavaScript:

Reading from JDBC Source

let jdbcData = null; // will receive a message type

let customer_id = 1234;

try {

// Invoke service function.

// Servcie access defined as synchronous. Therefore no promise syntax here

jdbcData = services.CustomerData.MyFunction(

{Id: customer_id}

);

// services: fixed internal term to access linked services

// CustomerData: The logical name of the service which we have given to it

// MyFunction: Collection function to read the customer data with the given customer_id

} catch (error) {

// handle error

}

// Output the customer data to the processor log

if (jdbcData && jdbcData.data.length > 0) {

processor.logInfo('Name: ' + jdbcData.data[0].Name);

processor.logInfo('Address: ' + jdbcData.data[0].Address);

} else {

processor.logInfo('No customer data found for customer ID ' + customer_id);

}

Note how the Service function returns a Message as a result type.

Since SQL-queries always return arrays, you can find the results in message.data as an array. If we are only expecting

one row as a result we can test it with jdbcData.data.length > 0 and access the first row with jdbcData.data[0].

Insert/Update to JDBC

Let's assume we also had defined a function WriteCustomerData which inserts a new customer:

insert into customer

values id = :Id, name = :Name, address = :Address;

We could then invoke this function and pass values to it like so:

try {

services.CustomerData.WriteCustomerData(

{

Id: 1235,

Name: 'John Doe',

Address: 'Main Street',

}

)

} catch (error) {

// handle error

}

It works the same for any other JDBC compliant statement.

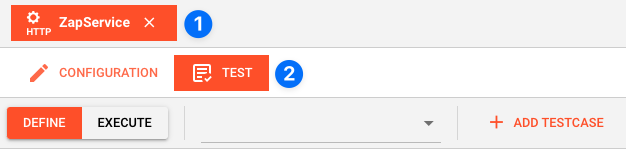

Service Testing

layline.io provides a test facility for testing your Services before you deploy them. In this way, you save time and effort by testing your Services without having to deploy and activate a whole Project with Workflows.

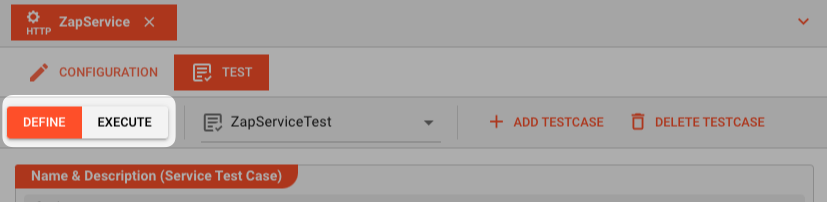

Once you have configured your Service(s), you can test them:

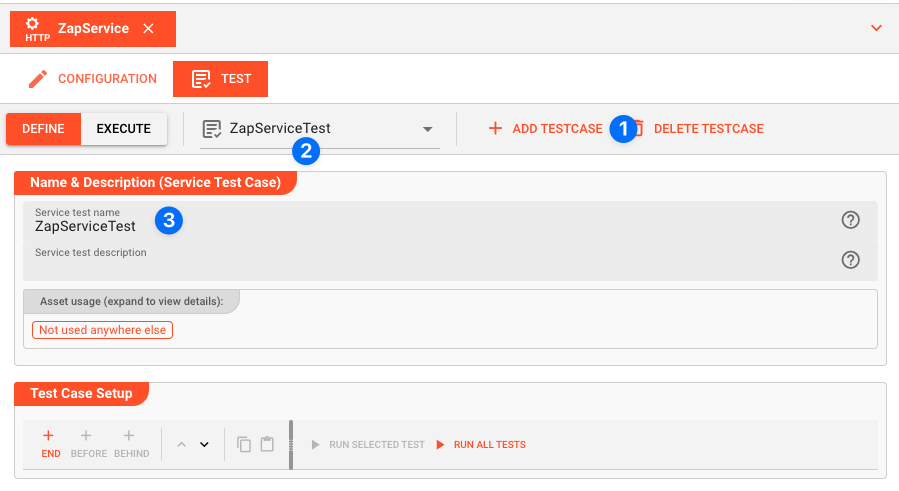

Within your Asset Configuration tab (1), switch to the Test tab (2) to test your Service.

Test Facility Toolbar

The toolbar provides the following options:

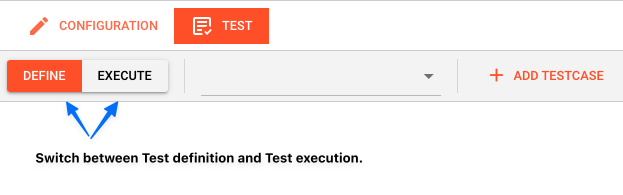

The Testing tab provides two major views:

- Testcase configuration: This is where you define the testcases to be executed.

- Testcase execution: This is where you can execute the testcases and see the results.

You switch between these two views by clicking on the leftmost icon in the toolbar (1).

Let's start with the Testcase configuration view.

Testcase Configuration

The concept of the Testing is to define a set of Testcases which can be executed in a batch or individually. For this purpose, you can define multiple Testcases and configure them individually. I.e. each Testcase groups a number of indidivual tests which can be executed individually or in a batch.

Adding a Testcase

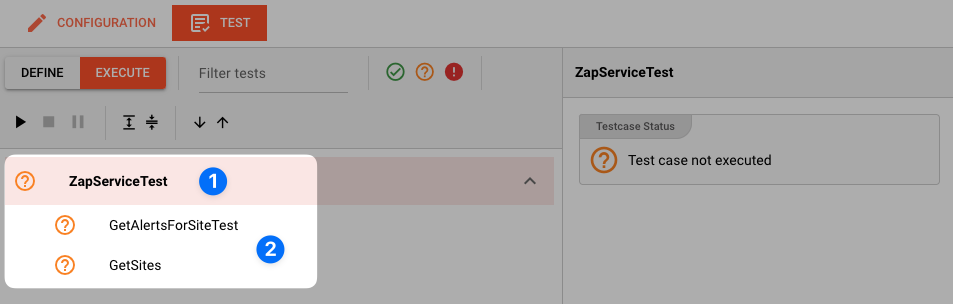

Click Add Testcase in the toolbar to add a new testcase:

A new Testcase is added.

It is automatically named New<Service Asset Name>Test (3) and added to the list of Testcases (2).

Service test name(3): You can change the name of the Testcase here.Service test description(4): You can add a description to the Testcase here.

Test Case Setup

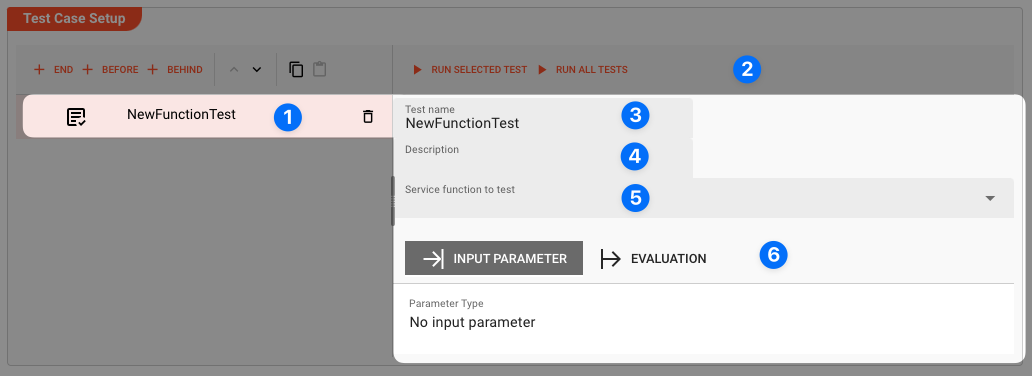

Basics

In this section you define the individual tests to be executed for this Testcase.

To start, click # END in the toolbar:

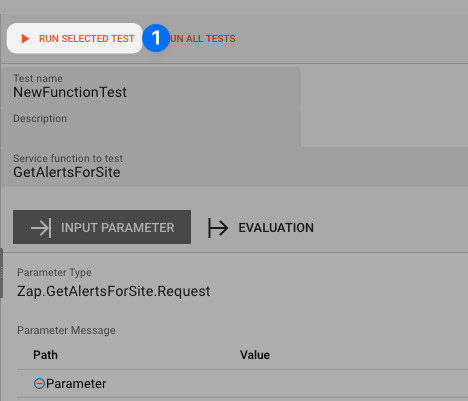

A new test is added to the list of tests (1), and the test is opened for configuration (2).

Next we fill in the details:

-

Test name(3): You can change the name of the Test here. -

Test description(4): You can add a description to the Test here. -

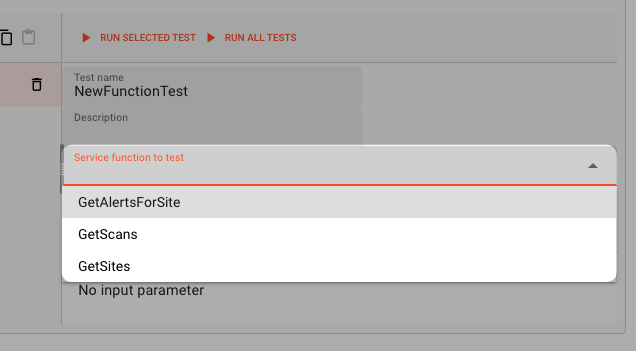

Service function to test(5): Select the Service function to test here.This list contains all Service functions which are defined in the Service Asset. Pick the one you want to test.

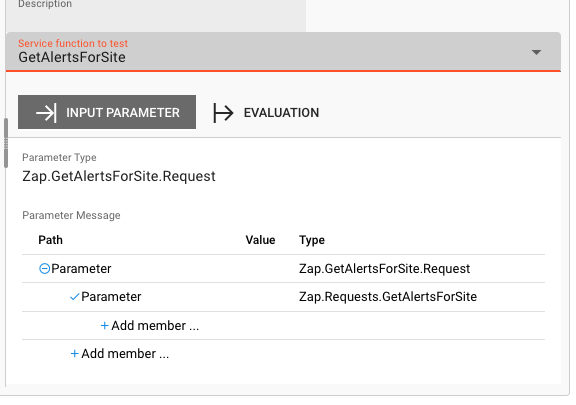

Once a Service function is selected, the system will automatically create a skeleton to fill in the respective parameters for the selected Service function.

Service Function Input Parameters

-

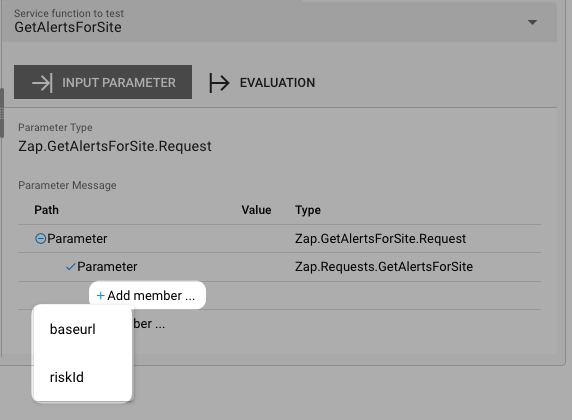

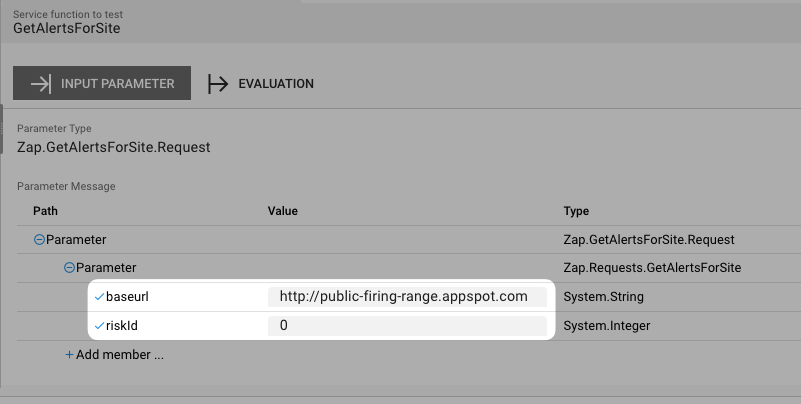

Service Function Input Parameters(6): Fill in the respective parameters for the selected Service function.In our example we have a function

GetAlertsForSitewhich takes two parametersbaseurlandriskId. If we click onAdd memberin the skeleton table the system will allow you to select the respective parameter from the list of available parameters:

Once you have selected the parameter, the system will automatically add the respective parameter name. You then add the respective value for the parameter:

Service Function Evaluation Parameters

To automatically evaluate the result, you can add a script which analyzes the results.

Testcase Execution

Once you have configured your Testcases, you can execute them.

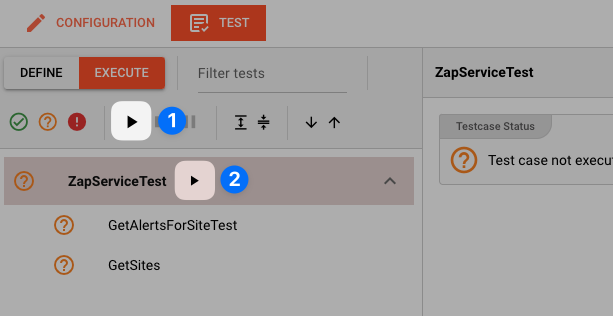

There are two ways on how to trigger execution:

-

Option 1: Select

Run selected testin the toolbar (1) to execute the currently selected Testcase.

Executing a test this way will switch the tab to the Testcase execution view, execute the test and show the results.

-

Option 2: Switch to the Testcase execution view by clicking on the leftmost icon in the toolbar (1) select the test to execute, and then hit the

playbutton next to the test.

Each option will take us to the Testcase execution view:

In this view you can find the Testcase (1) and the Tests (2) we have created.

If we had created additional tests for this Testcase, they would be listed here as well.

Question marks indicate that the test has not yet been executed.

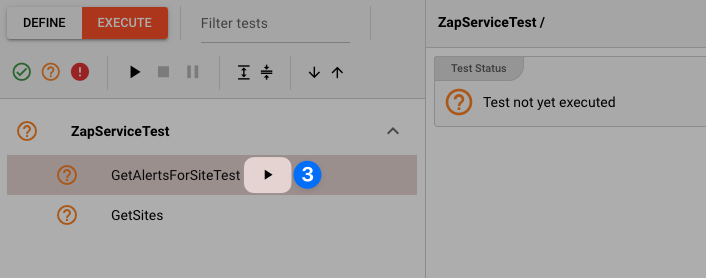

We can now either execute all tests, or run them individually:

-

Run all Tests(1): Click this button to execute all tests. -

Run Testcase(2): Click this button to a Testcase with all its underlying individual tests.

-

Run individual Test(3): Click this button next to a test to execute this individual test.

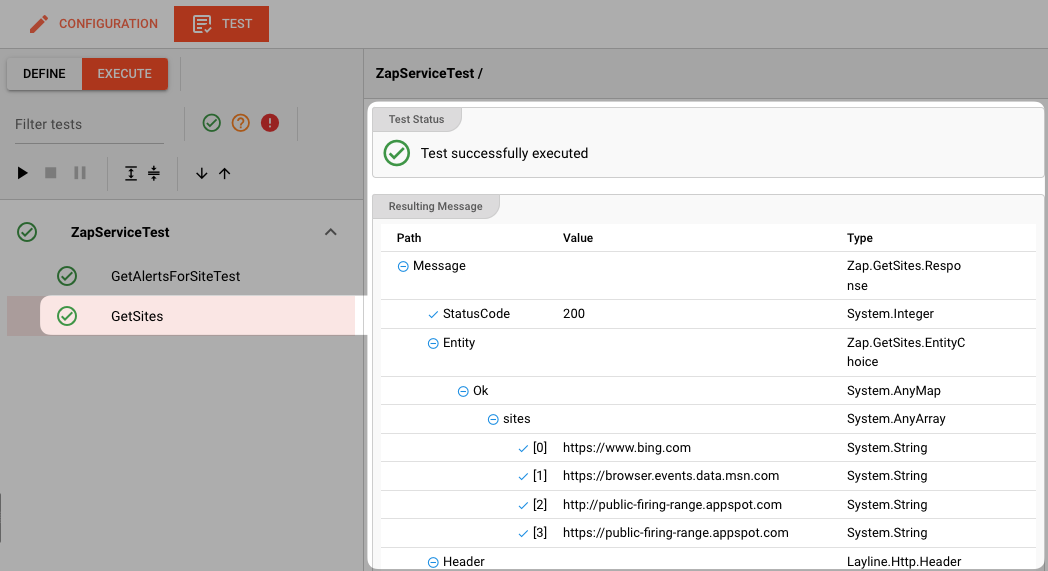

Once a test has been executed, the question mark will be replaced by a green check mark or a red cross depending on whether the test was successful or not.

The right hand-panel will show the results of the test execution respectively:

In case of errors, the system will show the error message for further investigation.

Please note, that the creation of the online documentation is Work-In-Progress. It is constantly being updated. should you have questions or suggestions, please don't hesitate to contact us at support@layline.io .